For my own learning, I will go through the linear regression cost function very slowly so that I understand exactly how it works. My hope is that this little exercise will cement my understanding or improve my intuition for this function a little more.

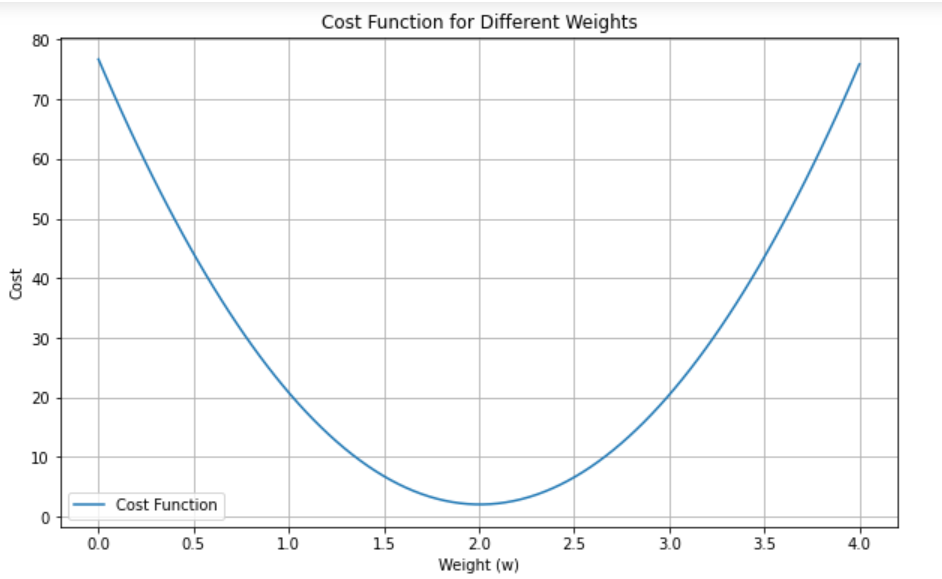

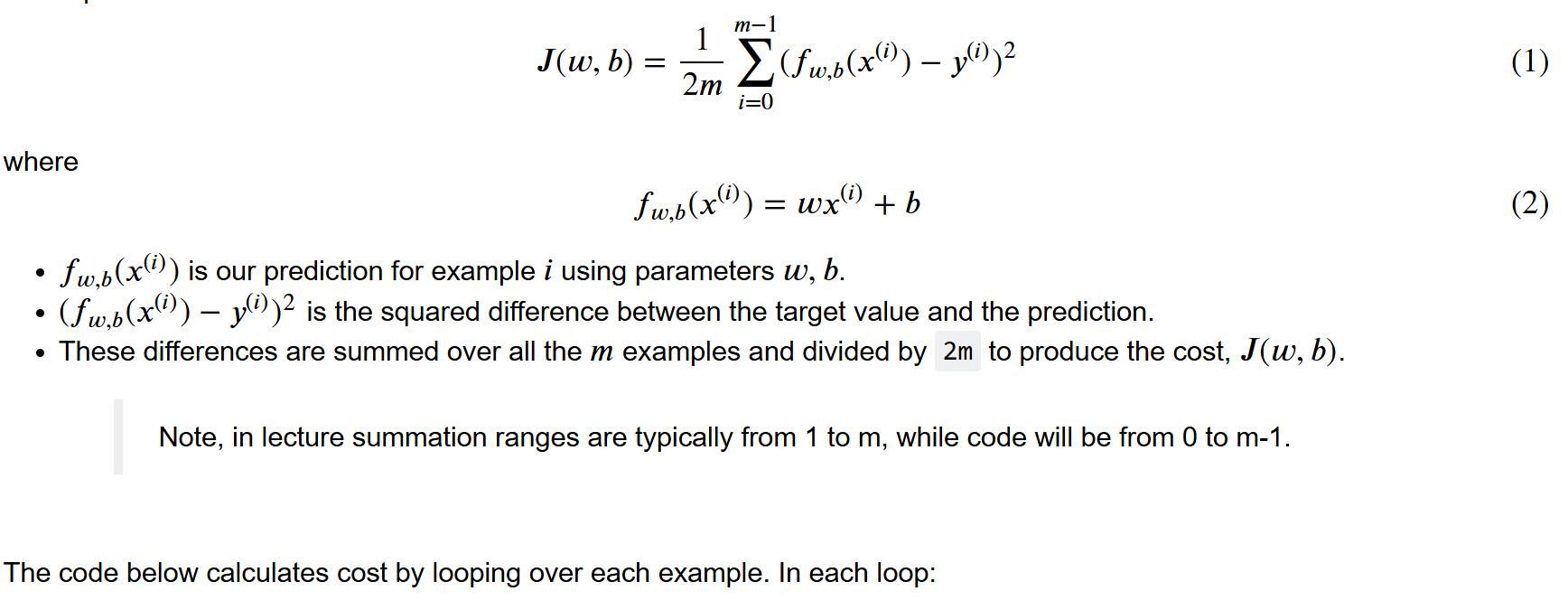

The equation for cost with one variable is:

$$J(w,b) = \frac{1}{2m} \sum\limits_{i = 0}^{m-1} (f_{w,b}(x^{(i)}) - y^{(i)})^2 $$
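To make the formula concrete, here is the arithmetic for a tiny made-up dataset. The values of `x`, `y`, `w` and `b` are hypothetical, chosen only so the numbers are easy to follow by hand. This is a plain-Python sketch using lists.

```python
# Hypothetical toy data: two training examples (values chosen for illustration only).
x = [1.0, 2.0]
y = [300.0, 500.0]
w, b = 100.0, 100.0

m = len(x)  # m = 2 training examples

# Squared error (w * x_i + b - y_i)^2 for each example:
# (200 - 300)^2 = 10000 and (300 - 500)^2 = 40000
squared_errors = [(w * x[i] + b - y[i]) ** 2 for i in range(m)]

# J(w, b) = (1 / (2m)) * sum = 50000 / 4
J = sum(squared_errors) / (2 * m)
print(squared_errors, J)  # [10000.0, 40000.0] 12500.0
```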

First, we need to set up some background variables.

I will create a function in Python named `find_cost` with the parameters `x`, `w`, `y` and `b`. Inside the function, I also create a variable `m` that holds the number of training examples in `x`.
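Assuming `x` is a one-dimensional NumPy array (the post uses `x.shape[0]`, which suggests NumPy, but does not say so explicitly), `shape[0]` is the length of its first axis, which is the number of training examples. A quick sketch with hypothetical data:

```python
import numpy as np

# Hypothetical inputs: one feature, four training examples.
x_train = np.array([1.0, 1.5, 2.0, 3.0])

m = x_train.shape[0]  # length of the first axis = number of examples
print(x_train.shape, m)  # (4,) 4
```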

```python
def find_cost(x, w, y, b):
    # This finds the overall number of training examples.
    m = x.shape[0]

    # This variable will accumulate the sum of the squared errors from the later code.
    # We start it at 0.
    cost_sum = 0
```

We can now begin plugging in the cost function equation from above. We start by computing our predicted values, which would be:

$$f_{w,b}(x^{(i)}) $$

since

$$f_{w,b}(x^{(i)}) = wx^{(i)} + b$$

so in Python, for the i-th training example, it would be:

```python
f_wb = w * x[i] + b
```
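In the per-example form `w * x[i] + b`, `f_wb` holds the prediction for a single training example. As a side note, if `x` is a NumPy array (an assumption), the same expression applied to the whole array gives every prediction at once, because NumPy broadcasts the scalars `w` and `b` across it. A small sketch with hypothetical values:

```python
import numpy as np

# Hypothetical inputs and parameters, chosen only for illustration.
x = np.array([1.0, 2.0, 3.0])
w, b = 2.0, 1.0

# One prediction per example: f_wb = w * x[i] + b
per_example = [float(w * x[i] + b) for i in range(x.shape[0])]

# All predictions at once: NumPy broadcasts the scalars w and b over x.
all_at_once = w * x + b

print(per_example)  # [3.0, 5.0, 7.0]
print(all_at_once)  # [3. 5. 7.]
```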

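Next, we subtract the actual value $y^{(i)}$ from the prediction and square the result:

$$((wx^{(i)} + b) - y^{(i)})^2 $$

```python
cost = (f_wb - y[i]) ** 2
```

Summing this over all $m$ training examples gives:

$$\sum\limits_{i = 0}^{m-1} ((wx^{(i)} + b) - y^{(i)})^2 $$

Then we add all the squared errors up by accumulating (`+=`) each one into the variable `cost_sum`:

```python
cost_sum += cost
```

Finally, we multiply the sum by $\frac{1}{2m}$:

$$\frac{1}{2m} \sum\limits_{i = 0}^{m-1} ((wx^{(i)} + b) - y^{(i)})^2 $$

```python
total = (1 / (2 * m)) * cost_sum
```

So altogether we have: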

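```python
def find_cost(x, w, y, b):
    # Number of training examples (x is assumed to be a 1-D NumPy array).
    m = x.shape[0]
    # Running total of the squared errors, starting at 0.
    cost_sum = 0
    for i in range(m):
        # Prediction for example i: f_wb(x^(i)) = w * x^(i) + b
        f_wb = w * x[i] + b
        # Squared error for example i
        cost = (f_wb - y[i]) ** 2
        cost_sum += cost
    # Scale the summed squared errors by 1 / (2m).
    total = (1 / (2 * m)) * cost_sum
    return total
```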
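For comparison, here is a sketch of the same computation without an explicit loop, assuming `x` and `y` are NumPy arrays of equal length. The name `find_cost_vectorized` and the data are hypothetical; the function replaces the loop and running sum with array arithmetic and `np.sum`.

```python
import numpy as np

def find_cost_vectorized(x, w, y, b):
    """Hypothetical loop-free version of find_cost (same argument order)."""
    m = x.shape[0]                    # number of training examples
    f_wb = w * x + b                  # predictions for all examples at once
    squared_errors = (f_wb - y) ** 2  # one squared error per example
    return np.sum(squared_errors) / (2 * m)

# Hypothetical data where y = 2x + 1 exactly.
x_train = np.array([1.0, 2.0, 3.0, 4.0])
y_train = np.array([3.0, 5.0, 7.0, 9.0])

# With w = 1, b = 1 the errors are -1, -2, -3, -4: (1 + 4 + 9 + 16) / 8 = 3.75
print(find_cost_vectorized(x_train, 1.0, y_train, 1.0))  # 3.75
```

The loop is easier to follow step by step, which is the point of this exercise; the array version is shorter and usually faster on large datasets.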

The Python code above is equivalent to:

$$J(w,b) = \frac{1}{2m} \sum\limits_{i = 0}^{m-1} (f_{w,b}(x^{(i)}) - y^{(i)})^2 $$
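One way to build intuition for $J(w,b)$ is to evaluate it for several values of $w$ while holding $b$ fixed. The sketch below uses hypothetical data generated exactly by $y = 3x$, so the cost should drop to zero at $w = 3$. It writes $\frac{1}{2m}\sum$ as half of the mean squared error, which is the same quantity.

```python
import numpy as np

# Hypothetical data generated exactly by y = 3x, so the best fit is w = 3, b = 0.
x = np.array([1.0, 2.0, 3.0, 4.0])
y = np.array([3.0, 6.0, 9.0, 12.0])
b = 0.0  # hold b fixed and vary only w

costs = {}
for w in [1.0, 2.0, 3.0, 4.0]:
    # (1 / (2m)) * sum of squared errors == half of the mean squared error
    costs[w] = float(np.mean((w * x + b - y) ** 2) / 2)
    print(w, costs[w])

# Expected output:
# 1.0 15.0
# 2.0 3.75
# 3.0 0.0
# 4.0 3.75
```

In this example the cost is smallest at w = 3, where the line passes through every point, and it grows as w moves away in either direction.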

This really helped! I’ll keep doing this in the future!
